Unlocking the Power of Free and Open Source AI Coding Tools

I'm usually the last to jump on the latest trend when it comes to development tools. I've been developing websites at rtraction since 2008, which makes me an old man in developer years, set in my ways: I'm not pulling any all-nighters and I don't work on weekends. I reach for jQuery when I need to do some quick JavaScript (who has time to learn this week's JS framework?). Get off my lawn! I love my job, and when it's time to do it, I do it right with the tried-and-true tools I've curated over the last 16 years.

So, one year ago, when a friend sent me a link to ChatGPT and told me it could write code, I was skeptical. I was sure that was impossible, so I signed up and gave it what I figured would be a real challenge.

February 2023:

> Gavin: Make a Drupal 9 controller for a dashboard that lists reports.

> ChatGPT: *delivers*

> Gavin: mindblown.gif

That was the moment that my special interest became Artificial Intelligence. I started using ChatGPT to save on typing, mostly to generate boilerplate code. It always seemed a little weird that everything I typed in would become part of the next version's training data. So I started tinkering with OpenAI's GPT-4 API, where data is supposedly encrypted and not used for training. Then I accidentally spent $12 trying to analyze a CSV file.

December 2023:

> Gavin: Analyze this CSV file: large_file.csv

> GPT-4: //reads gigantic file

> GPT-4: //incurs $12 of API costs

> Gavin: emptywallet.gif

That was the moment that my special interest became local large language models.

The problem with locally installed LLMs was that they were not very good. That is, until Meta released Llama3 in April. Llama3 performs better on my laptop than ChatGPT did when I first used it. Here's how to use it yourself:

- Hardware Requirements:

As of now, Llama3 requires at least 5GB of RAM to hold the entire model file. If you have an M2 MacBook Air like me, with 16GB of RAM, you're all set! Ollama, the software that will run the model, also provides instructions for Windows and Linux users on its website.

- Download and Install Ollama:

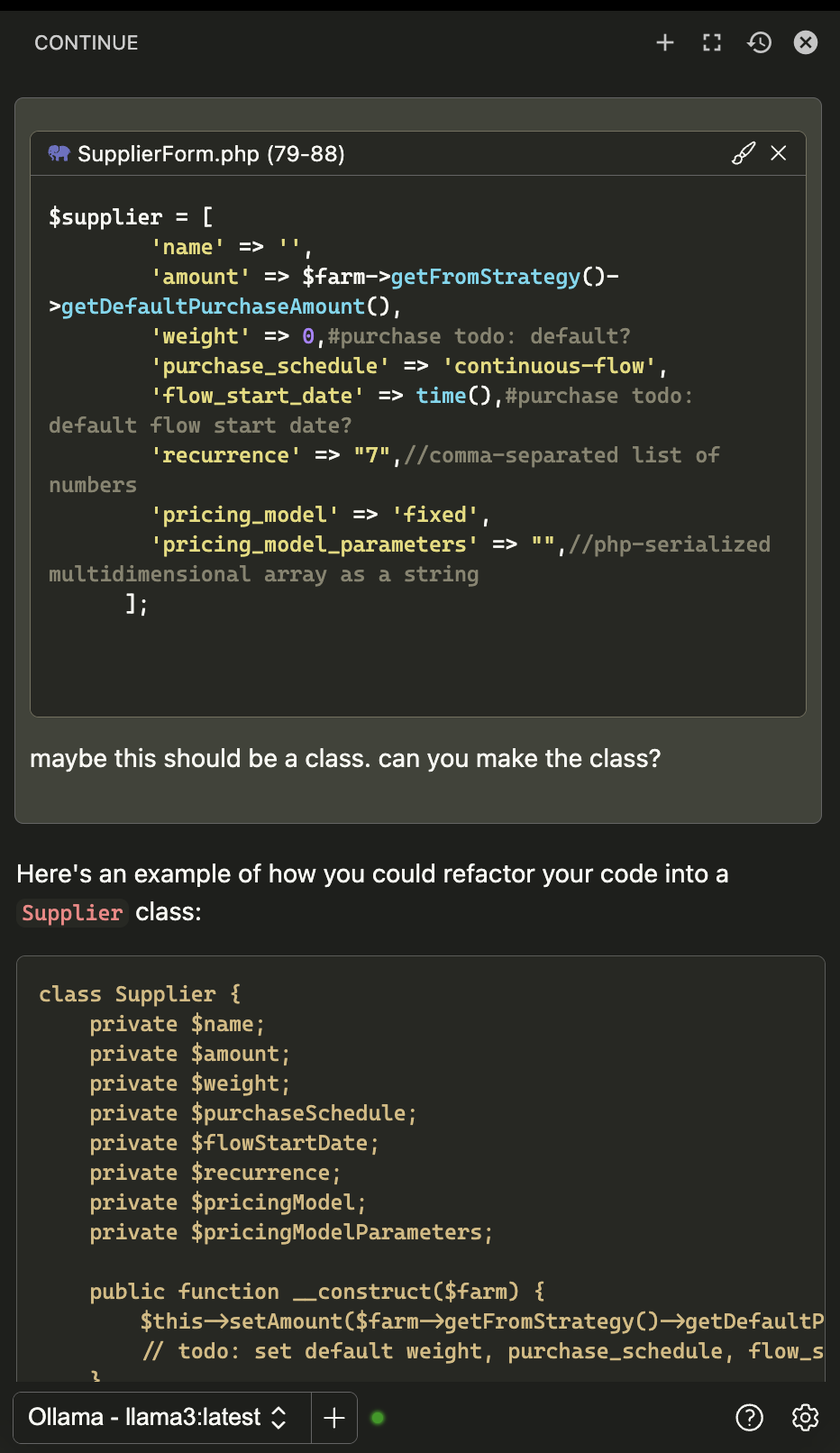

Head over to ollama.com, then download and run Ollama. On a Mac, it places a little llama icon in your menu bar. Then open your terminal and type `ollama run llama3:latest`. If this is your first time running the command, it will download the 5GB model file. Ollama gives you a command-line interface that you can use to chat with Llama3. It also starts a server at `localhost:11434`, which you can use to connect other tools to Ollama.

- Install Continue in your IDE:

Download and install the Continue extension. For VS Code, the extension is in the Visual Studio Marketplace. It's also available for PhpStorm.

- Configure Continue for Ollama:

Open Continue's settings JSON file by clicking on the cog icon. Then, set the "models" section to the following:

```json
"models": [
  {
    "title": "Ollama",
    "provider": "ollama",
    "model": "AUTODETECT"
  }
],
```

Now you can highlight some code, right-click (or press cmd+shift+P), add the selected code to Continue's context, and start using Llama3 to generate code suggestions, modify existing code, or even create entirely new files. The best part is that none of your data leaves your machine!
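If you'd rather script that configuration step, here's a minimal Python sketch that writes the same "models" entry into Continue's settings file while keeping any other keys. It assumes the file lives at `~/.continue/config.json` (where Continue kept it when I set this up; check the path on your install) and that it is plain JSON without comments. The helper name `set_ollama_models` is made up for illustration.

```python
import json
from pathlib import Path

# Assumed location of Continue's settings file; adjust if your install differs.
DEFAULT_CONFIG = Path.home() / ".continue" / "config.json"

# The same entry as in the JSON above: Continue asks Ollama which models exist.
OLLAMA_MODELS = [
    {
        "title": "Ollama",
        "provider": "ollama",
        "model": "AUTODETECT",
    }
]


def set_ollama_models(config_path: Path = DEFAULT_CONFIG) -> dict:
    """Replace the "models" section of a Continue config file, keeping other keys.

    Hypothetical helper: assumes the file is plain JSON (no comments).
    """
    config = {}
    if config_path.exists():
        config = json.loads(config_path.read_text(encoding="utf-8"))
    # Copy each entry so the module-level template can't be mutated via the result.
    config["models"] = [dict(entry) for entry in OLLAMA_MODELS]
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return config


# Usage: call set_ollama_models() once, then reload your IDE window.
```

Back up your config first: this overwrites whatever "models" list was there and rewrites the file with its own indentation.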
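Ollama's server on `localhost:11434` isn't just for Continue: any script can send it some code and a question. Here's a rough sketch using only Python's standard library to call Ollama's `/api/chat` endpoint with streaming turned off, so the reply comes back as a single JSON object. The function names and the prompt layout are my own illustration, not how Continue builds its prompts internally, and it assumes you've already pulled `llama3:latest`.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"


def build_chat_request(code: str, instruction: str,
                       model: str = "llama3:latest") -> urllib.request.Request:
    """Package a code snippet plus an instruction as a non-streaming chat request."""
    payload = {
        "model": model,
        "messages": [
            {
                "role": "user",
                # Hypothetical prompt layout: the selected code, then what to do with it.
                "content": f"Here is some code:\n\n{code}\n\n{instruction}",
            }
        ],
        # Ask for one JSON reply instead of a stream of partial chunks.
        "stream": False,
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(body: bytes) -> str:
    """Pull the assistant's text out of a non-streaming /api/chat response."""
    return json.loads(body)["message"]["content"]


def ask_llama(code: str, instruction: str) -> str:
    """Send the request to the local Ollama server and return the model's answer."""
    request = build_chat_request(code, instruction)
    # Local generation can be slow on a laptop, so allow a generous timeout.
    with urllib.request.urlopen(request, timeout=300) as response:
        return extract_reply(response.read())


# Usage (with Ollama running):
# print(ask_llama("$('#reports li').toggle();", "Explain what this jQuery does."))
```

Nothing in this round trip leaves your machine either; the request only ever goes to `localhost`.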

April 2024: